AI-Powered Conversational Bias Research

An Android app that explores the OpenAI API beyond a basic chatbot: a Stoic-themed philosophical conversation space plus an advanced UI for testing your ideas and revealing potential bias patterns through guided questions and sentiment cues.

This project was built as a hands-on platform for testing three OpenAI API capabilities: conversation quality, moderation, and sentiment analysis. It is a research proof of concept (POC) with no commercial intent, focused on learning, iteration, and careful observation of how people express and refine ideas.

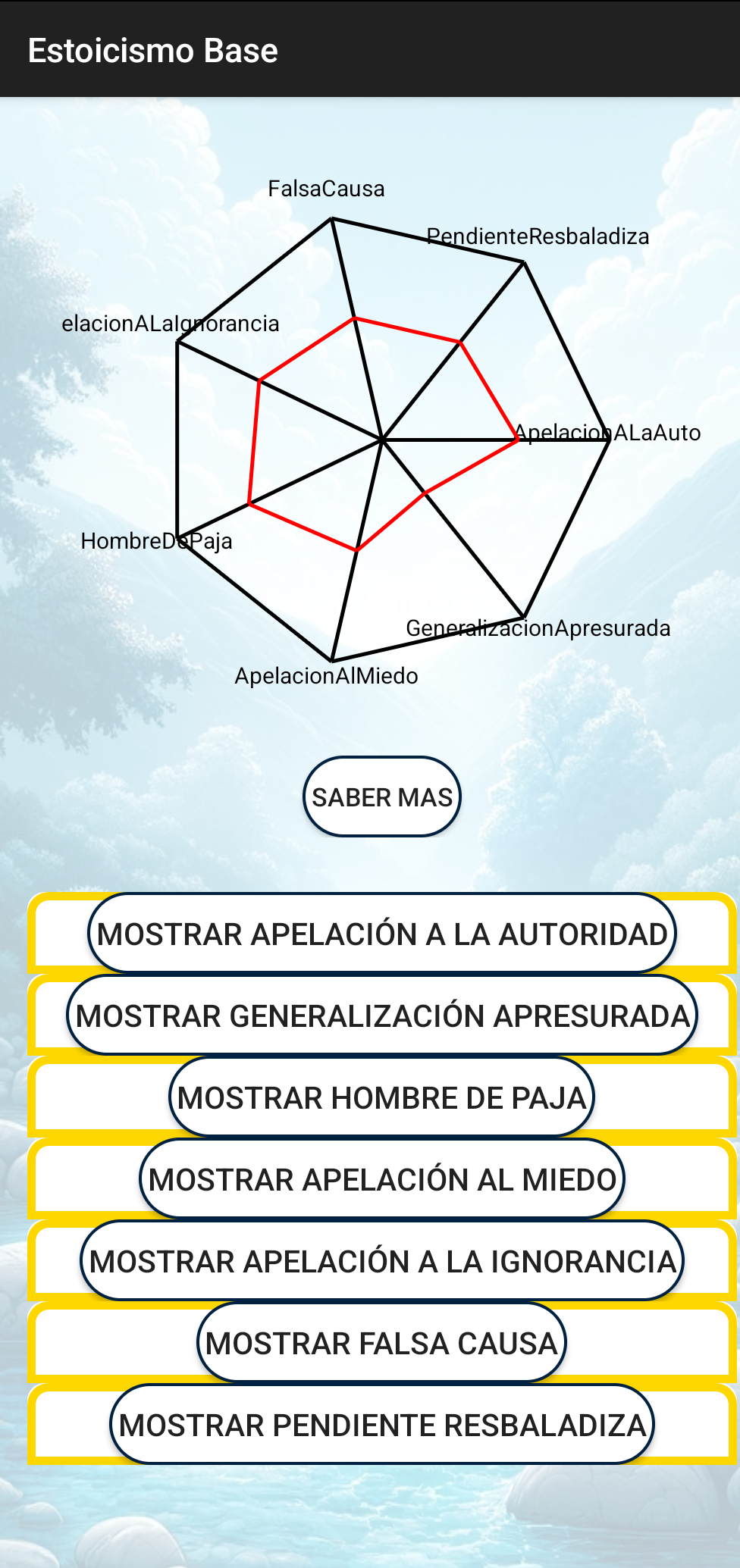

Beyond philosophical dialogue, the app includes a bias-testing module with an advanced UI. Instead of labeling users as “biased,” it guides them through questions and alternative framings—helping them notice when an opinion may be influenced by assumptions, emotional tone, or incomplete information. Think of it as: “put your ideas to the test.”

Stoic conversations + “Test your ideas”

To make conversations accessible and practical, I used Greek Stoicism as a central theme. It serves not as a strict doctrine but as a friendly structure for reflection: it’s pragmatic, easy to approach, and helps people discuss emotions, decisions, responsibility, and everyday challenges without requiring a deep philosophical background.

“The goal is not to judge your thinking. It’s to help you look at your own ideas from a different angle.”

The bias module is designed around a simple principle: guide, don’t accuse. It asks clarifying questions and proposes alternative formulations, while a sentiment detection layer offers suggestions to express ideas more clearly and reduce emotional distortion. The experience feels like a structured dialogue where you can pressure-test your reasoning and spot weak assumptions.
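
As a rough illustration of the “guide, don’t accuse” principle, the sketch below shows one way a sentiment cue could shape the instructions sent alongside a user’s statement. It is not the app’s actual implementation. The prompt text, the `SentimentCue` type, its labels, and the `threshold` value are all assumptions made for this example. Only the role/content message shape follows the chat format used by the OpenAI API, and no network call is made.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical system prompt; the app's real prompt is not shown in this document.
GUIDE_PROMPT = (
    "You are a calm, Stoic-inspired conversation partner. "
    "Never label the user as biased. Ask one clarifying question "
    "and offer one alternative framing of their statement."
)


@dataclass
class SentimentCue:
    """Output of an assumed sentiment detection step."""
    label: str        # assumed labels: "negative", "neutral", "positive"
    intensity: float  # assumed scale: 0.0 (calm) to 1.0 (heated)


def build_messages(statement: str, cue: SentimentCue,
                   threshold: float = 0.7) -> list[dict]:
    """Assemble role/content chat messages for one guided turn."""
    system = GUIDE_PROMPT
    # Only strongly negative wording triggers the extra rephrasing hint.
    if cue.label == "negative" and cue.intensity >= threshold:
        system += (" The wording is emotionally charged;"
                   " also suggest a calmer rephrasing.")
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": statement},
    ]


if __name__ == "__main__":
    cue = SentimentCue(label="negative", intensity=0.9)
    for message in build_messages("Everyone at work is lazy.", cue):
        print(message["role"], "->", message["content"])
```

Keeping the sentiment cue separate from the prompt means the guidance stays the same for every user, and only the optional rephrasing hint changes with emotional tone.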

Research POC and next modules

As a research POC, the project is open to further development if I find collaborators or interested partners. Modules already designed (conceptually, partially prototyped, or both) include moderated group conversations, an AI-assisted fallacy detector, and a more direct bias detector for 1-to-1 conversations.

If you have tried the app and found it interesting, your feedback helps me refine the bias-guidance approach and improve the user experience. Even short comments on what felt useful, confusing, or missing are extremely valuable.